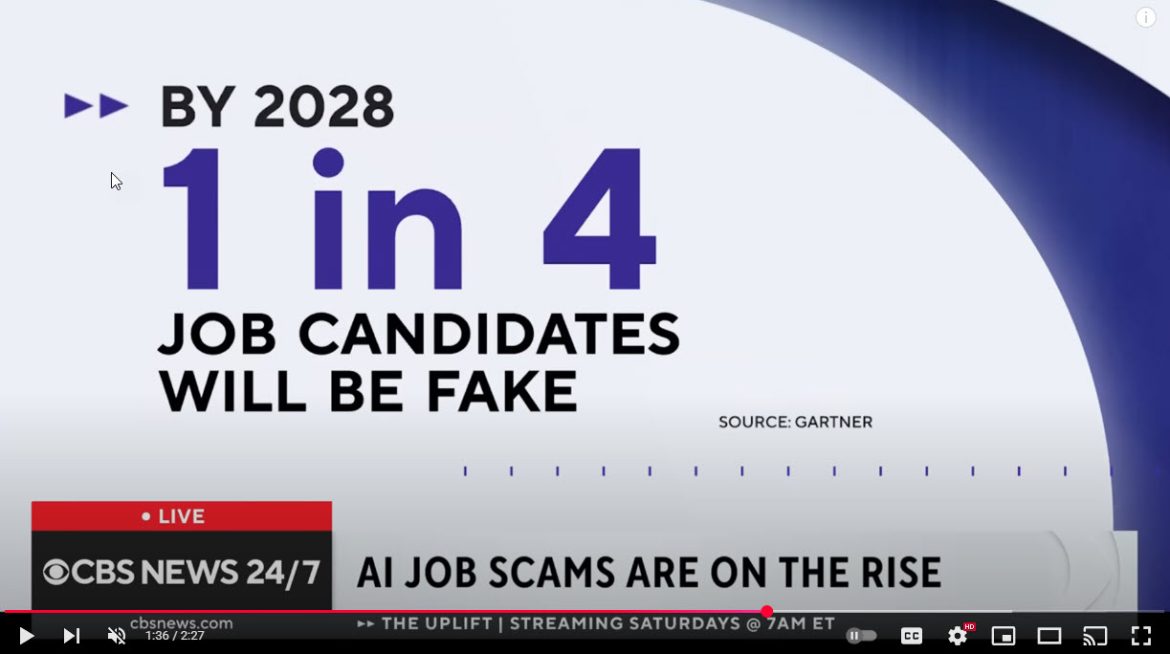

As artificial intelligence continues to transform industries, a new and deeply concerning trend is emerging in the job market: AI-powered bots are impersonating human job seekers. Companies across multiple sectors are reporting a rise in sophisticated employment scams in which bots — not real people — apply for jobs, attend virtual interviews, and even submit AI-generated work samples, blurring the line between automation and deception.

According to multiple human resources and cybersecurity firms, the issue is rapidly escalating. Fake applicants, often operating through AI avatars or text-based bots, are now capable of navigating pre-screening questions, tailoring resumes with alarming precision, and holding preliminary video interviews using deepfake technology. The goal is not always to land the job — some scams aim to gather insider information, gain unauthorized access to systems, or simply harvest identity data.

Recruiters and hiring managers say the impersonations are becoming increasingly difficult to detect. In some cases, AI-generated resumes match job descriptions with uncanny accuracy. In others, voice-cloned bots conduct phone interviews convincingly enough to pass through multiple hiring stages before being flagged.

Cybersecurity expert and recruiter Ashley Henshaw explains, “It’s no longer just about fake credentials or fraudulent references. These bots are running full campaigns — from resume to reference to real-time interviews — sometimes controlled by networks of scammers trying to access sensitive information or infiltrate a company.”

The trend is not limited to one industry. Companies in tech, finance, education, and even healthcare report being targeted. Remote-first businesses are especially vulnerable due to a lack of in-person verification and reliance on virtual hiring platforms. In some extreme cases, companies say they only discovered the deception after the “employee” was hired and began missing meetings, failing to perform, or behaving erratically in team chats.

To combat the threat, employers are now adopting advanced verification tools, including biometric screening, digital watermarking of credentials, AI-detection software during video interviews, and stricter background check protocols. Some are turning to third-party firms specializing in “anti-bot” recruitment security to audit their pipelines.

The Federal Trade Commission (FTC) has also issued warnings about the trend, citing a surge in scams that impersonate job applicants as well as scams that impersonate employers. The fraud runs in both directions: bots pose as job seekers, while scammers create fake job postings in the names of well-known companies to trick real people into divulging personal information or paying fraudulent application fees.

The rise of AI impersonation raises serious ethical and regulatory questions. Experts argue that while AI holds promise for speeding up hiring and streamlining HR operations, its misuse could undermine trust, severely damage employer reputations, and put applicants' safety at risk.

Dr. Laura Perez, a professor of digital ethics at NYU, warns, “If we don’t put safeguards in place now, we risk eroding the legitimacy of the hiring process itself. AI should enhance transparency and fairness — not be used as a tool of deception.”

While some platforms, such as LinkedIn and Indeed, have updated their terms of service to crack down on AI misuse, enforcement remains challenging. Many scams originate overseas or use anonymizing software to mask their digital footprints. Experts urge job seekers to stay vigilant: verify recruiter identities, avoid interviews conducted on unofficial platforms, and never submit sensitive data until the employer's legitimacy has been confirmed.

As AI continues to shape the future of work, both companies and applicants must adapt — but also stay alert. In the evolving digital job market, it’s no longer just about qualifications. It’s about whether the person on the other side of the screen is real.